Today’s guest blog post is about MDM for SAP.

At the heart of many organizations is an Enterprise Resource Planning (ERP) system, which organizations install to simplify and integrate business operations such as supply chain, finance, human resources, procurement, and marketing into one cohesive system.

The data stored in ERP systems comes from multiple sources and geographies, and its structure varies with the types of datasets an organization needs to maintain. Departments use these datasets to run analytics and to project the company's growth and opportunities.

A Deloitte report states that “data migration and generative AI are key growth areas, with 80% of global business leaders believing AI will boost business efficiency”. As technology advances, data migration often becomes a challenge for organizations, yet it remains an essential step for an efficient ERP implementation and for maintaining data quality. The process therefore needs careful planning and execution to avoid data inaccuracies and inconsistencies.

Master data is the fundamental business data, such as customers, products, suppliers, employees, and financial accounts, that fuels an organization’s daily operations. Poorly executed data migration can result in data inconsistency, business disruption, and expensive delays. It is therefore crucial to plan and implement master data migration meticulously.

In this guide, we will discuss the best practices, strategies, and important considerations to make your master data migration to your new ERP system smooth and successful.

What is ERP Master Data Migration?

ERP Master Data Migration is the activity of moving important data from old systems (or other data sources) into a new ERP system, typically when an organization replaces or upgrades its ERP software. Gartner reports that “75% of ERP strategies are not strongly aligned with overall business strategy, leading to confusion and lackluster results.”

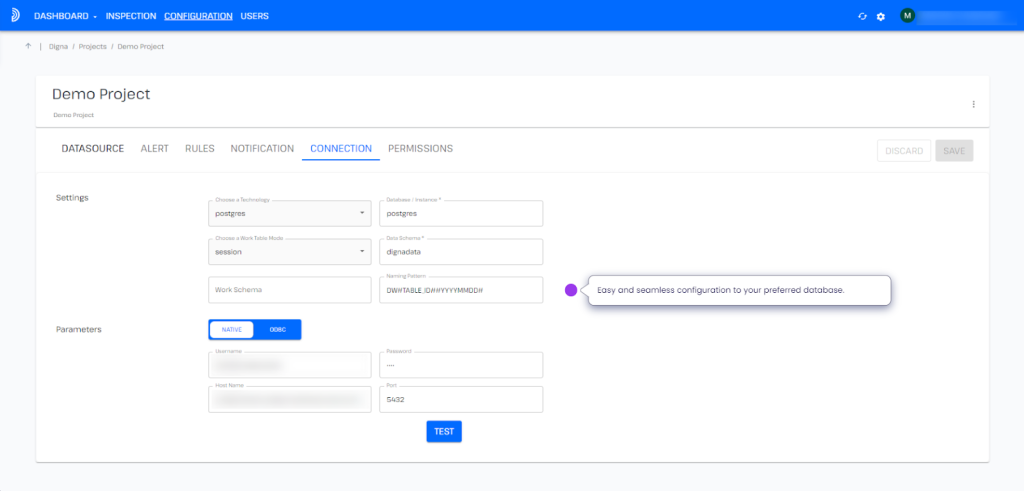

For example, when migrating from SAP ECC to SAP S/4HANA, organizations can take a series of steps to build their SAP master data management. These include extracting, transforming, cleansing, and loading the data (an extended ETL process) into the new ERP environment, while ensuring that it is accurate, consistent, and aligned with business needs.

Why is Master Data Migration Important?

The success of your ERP implementation depends on the quality of your data. Correct master data supports:

- Operational Efficiency: With dependable and uniform data, business operations such as procurement, sales, inventory, and accounting work harmoniously.

- Data Consistency: Master data migration ensures the new ERP system works with harmonized and standardized information.

- Compliance: In regulated industries, data integrity is essential for handling audits and meeting regulatory requirements.

- Decision Making: High-quality data supports accurate reporting and sound decision-making.

Major Challenges of ERP Master Data Migration

Before discussing best practices, let us look at the major challenges most organizations encounter during ERP master data migration:

- Data Quality Issues: Unreliable, stale, or erroneous data in legacy systems.

- Complex Data Structures: Legacy systems can use various data formats, all of which must be mapped to the new ERP system.

- Integration across Multiple Systems: Migrating data from multiple unrelated systems creates integration challenges.

- Disruption to Business: Incomplete or incorrect data migration can result in operational downtime and business process delay.

ERP Master Data Migration Step-by-Step Guide

Migrating master data to a new ERP system is a business-critical process that must be properly planned, executed, and verified to maintain business continuity and data integrity. The steps below break down the critical stages of an effective ERP data migration.

1. Planning and Preparation

The key to a successful migration is careful planning. Without a solid plan, the migration can quickly become chaotic and lead to problems such as data loss, inconsistencies, or delays. Here’s how you can lay the groundwork for a seamless migration:

- Scope and Data Requirements: Identify the types of data that must be migrated: customer information, financial data, product information, inventory, vendor information, etc. Determine which source systems currently hold this data and decide whether it should be migrated all at once or in phases.

- Assess Data Quality: Conduct a thorough review of the existing data to assess its quality. Look for discrepancies, outdated information, duplicate records, and gaps. This is the time to clean up the data before it enters the new system, as poor-quality data can have far-reaching consequences in the ERP system.

- Set Clear Objectives: Define clear objectives for the migration. Whether the goal is improving data accuracy, simplifying processes, or enabling better analytics and reporting, your objectives will shape the migration strategy and provide the criteria for judging whether the migration succeeded.

- Create a Budget and Timeline: Set a firm timeline for the migration that accounts for testing, data validation, and potential delays. Make sure you have sufficient time and budget to complete the migration successfully.
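The data quality assessment described above can start with a simple profiling pass over the legacy records. The sketch below is a minimal illustration, assuming hypothetical legacy customer records held as Python dictionaries; the field names, the name-based duplicate check, and the staleness cutoff are all assumptions, not part of any SAP tool.

```python
from collections import Counter
from datetime import date

# Hypothetical legacy customer records; field names are illustrative only.
LEGACY_CUSTOMERS = [
    {"id": "C001", "name": "Acme Corp", "email": "ops@acme.example", "last_updated": date(2024, 3, 1)},
    {"id": "C002", "name": "ACME Corp ", "email": "ops@acme.example", "last_updated": date(2019, 6, 15)},
    {"id": "C003", "name": "Globex", "email": "", "last_updated": date(2023, 11, 20)},
]

# Fields assumed to be mandatory in the target system.
REQUIRED_FIELDS = ("id", "name", "email")


def profile(records, cutoff):
    """Summarize common quality problems in legacy records before migration."""
    # Count empty or absent values for each required field.
    missing = Counter(
        field
        for r in records
        for field in REQUIRED_FIELDS
        if not str(r.get(field, "")).strip()
    )
    # Records whose normalized names collide are flagged as potential duplicates.
    names = Counter(r["name"].strip().lower() for r in records)
    duplicates = {name: count for name, count in names.items() if count > 1}
    # Records not updated since the cutoff date are flagged as possibly stale.
    stale = [r["id"] for r in records if r["last_updated"] < cutoff]
    return {
        "total": len(records),
        "missing": dict(missing),
        "duplicates": duplicates,
        "stale": stale,
    }


report = profile(LEGACY_CUSTOMERS, cutoff=date(2022, 1, 1))
print(report)
```

On this sample, the report flags C003 for a missing email, C001 and C002 as a likely duplicate pair, and C002 as stale. Counts like these give a rough sense of how much cleanup to budget for.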

2. Data Transformation and Mapping

Once you have a complete picture of the data that needs to be migrated, the next step is to map and reshape it to fit the new ERP system. This ensures that data from all the different legacy systems fits the fields and formats of the new ERP system.

- Data Mapping: Determine how each data element of the old system will be represented in the new system. Customer names and addresses in the old system, for instance, can be broken down into several fields in the new system, such as first name, last name, and address. It’s important to map all data fields meticulously to ensure accuracy.

- Data Transformation: This is the process of converting data into a form compatible with the new ERP system. It can entail normalizing values (e.g., converting product codes to a standard format) or changing the format of dates and addresses. Data transformation ensures that data from different sources integrates consistently into the new system.

- Data Enrichment: Missing data or out-of-date records must sometimes be filled in or updated prior to migration. Data enrichment can involve the addition of missing customer details, the updating of out-of-date financial records, or ensuring data consistency between data sets.
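The mapping and transformation rules above can be expressed as small, testable functions. This is a minimal sketch, assuming a hypothetical legacy record layout (`CUST_NAME`, `CREATED`, `PROD_CODE`) and an assumed target layout; real field names come from your own source systems and the target ERP's data model.

```python
from datetime import datetime


def split_name(full_name):
    """Split a single legacy name field into first and last name.

    Naive assumption: the last token is the surname, so 'Jane Q Doe'
    becomes ('Jane Q', 'Doe') and a one-word name has no first name.
    """
    parts = full_name.strip().split()
    if not parts:
        return "", ""
    return " ".join(parts[:-1]), parts[-1]


def to_iso_date(value, source_format="%d.%m.%Y"):
    """Convert a legacy date string (assumed DD.MM.YYYY) to ISO 8601."""
    return datetime.strptime(value.strip(), source_format).date().isoformat()


def normalize_product_code(code):
    """Uppercase a product code and drop separators, e.g. 'ab-123' -> 'AB123'."""
    return "".join(ch for ch in code.upper() if ch.isalnum())


def transform(legacy):
    """Map one hypothetical legacy record onto an assumed target layout."""
    first, last = split_name(legacy["CUST_NAME"])
    return {
        "first_name": first,
        "last_name": last,
        "created_on": to_iso_date(legacy["CREATED"]),
        "product_code": normalize_product_code(legacy["PROD_CODE"]),
    }


record = {"CUST_NAME": "Jane Q Doe", "CREATED": "05.03.2021", "PROD_CODE": "ab-123"}
print(transform(record))
# {'first_name': 'Jane Q', 'last_name': 'Doe', 'created_on': '2021-03-05', 'product_code': 'AB123'}
```

Keeping each rule in its own function makes the mapping easy to review with business owners and to unit-test. Real name splitting is harder than this (titles, compound surnames), so treat `split_name` as a placeholder.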

3. Data Cleansing and Validation

Data cleansing is a critical step that safeguards the quality of the data to be migrated. Clean data results in more credible reporting and better decision-making.

- Remove Duplicates: Identify and remove duplicate records so that they are not carried into the new system, where they would cause confusion and inefficiency.

- Correct Inaccurate Data: Carefully scan data for inaccuracies. This could involve correcting wrong addresses, outdated prices, or incorrect product descriptions. It is important to have accurate data for seamless operations in the new ERP system.

- Validate Data: Validate the data against business rules to confirm it is of high quality before migration. Involving key stakeholders or department heads in reviewing the data helps confirm its accuracy. Validation ensures that the migrated data is accurate and useful.
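Deduplication and rule-based validation can be sketched as follows. This assumes hypothetical customer records, treats a normalized email address as the matching key (keeping the most recently updated record), and uses three illustrative business rules; the email pattern is a basic sanity check, not a full address validator.

```python
import re
from datetime import date

# Basic email sanity check (assumption): something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical business rules; each returns True when the record passes.
RULES = {
    "email_format": lambda r: bool(EMAIL_RE.match(r.get("email", ""))),
    "name_present": lambda r: bool(r.get("name", "").strip()),
    "credit_limit_non_negative": lambda r: r.get("credit_limit", 0) >= 0,
}


def deduplicate(records):
    """Keep the most recently updated record per normalized email."""
    latest = {}
    unkeyed = []  # records without an email cannot be matched; keep them for review
    for r in records:
        key = r.get("email", "").strip().lower()
        if not key:
            unkeyed.append(r)
        elif key not in latest or r["last_updated"] > latest[key]["last_updated"]:
            latest[key] = r
    return list(latest.values()) + unkeyed


def validate(records):
    """Split records into those passing every rule and a list of failures."""
    valid, errors = [], []
    for r in records:
        failed = [name for name, rule in RULES.items() if not rule(r)]
        if failed:
            errors.append((r["id"], failed))
        else:
            valid.append(r)
    return valid, errors


records = [
    {"id": "C001", "name": "Acme Corp", "email": "ops@acme.example", "credit_limit": 5000, "last_updated": date(2024, 3, 1)},
    {"id": "C002", "name": "Acme Corp", "email": "OPS@acme.example", "credit_limit": 5000, "last_updated": date(2019, 6, 15)},
    {"id": "C003", "name": "Globex", "email": "billing@globex", "credit_limit": 1000, "last_updated": date(2023, 11, 20)},
    {"id": "C004", "name": "", "email": "info@initech.example", "credit_limit": -10, "last_updated": date(2024, 1, 5)},
]
unique = deduplicate(records)
valid, errors = validate(unique)
print([r["id"] for r in valid], errors)
```

The error list is what you would hand to data owners or department heads for correction, rather than silently fixing records in code.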

4. Migration Execution

After the data has been mapped, transformed, and cleansed, the next step is to perform the migration itself: transferring the data from the old systems to the new ERP system.

- Test Migration: Always begin with a test migration in a sandbox or non-production environment. This will enable you to mimic the migration process and see if there are any problems prior to the actual migration. Test the migration with a subset of data so you can ensure data integrity and mapping accuracy.

- Full Migration: After successfully completing the test migration and fixing all identified issues, proceed with the full data migration. Depending on the complexity and volume of the data, the migration can occur in stages, for example moving one department's data at a time to reduce risk and downtime. A phased approach also helps identify and resolve issues early.

- Data Migration Tools: Use specialized migration tools and services to automate the migration process. These tools can streamline data transfers, minimize manual errors, and accelerate the migration.
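The test-then-phase approach can be illustrated with a toy loader. In this sketch the "ERP" is just a Python dictionary keyed by record id, and `load`, `trial_migration`, and `phased_migration` are hypothetical helpers, not the API of any migration tool. Each phase is staged on a copy and committed only if every record loads, which stands in for the transactional loads a real tool would provide.

```python
import random
from collections import defaultdict


def load(target, records):
    """Stand-in for an ERP load step: 'target' is a dict keyed by record id.

    Raises ValueError on an existing id to mimic a duplicate-key error.
    """
    for r in records:
        if r["id"] in target:
            raise ValueError(f"duplicate key {r['id']}")
        target[r["id"]] = r


def trial_migration(records, transform, check, sample_size, seed=42):
    """Transform and load a reproducible random subset into an empty sandbox,
    then return the ids of loaded records that fail the check."""
    sample = random.Random(seed).sample(records, min(sample_size, len(records)))
    sandbox = {}
    load(sandbox, [transform(r) for r in sample])
    return sorted(rid for rid, rec in sandbox.items() if not check(rec))


def phased_migration(records, production, phase_key="department"):
    """Load one group (e.g. one department) at a time.

    Each phase is loaded into a staged copy and committed only if every
    record loads; the migration stops at the first failing phase.
    """
    phases = defaultdict(list)
    for r in records:
        phases[r[phase_key]].append(r)
    completed = []
    for phase, batch in phases.items():
        staged = dict(production)
        try:
            load(staged, batch)
        except ValueError as exc:
            return completed, (phase, str(exc))
        production.update(staged)  # commit this phase
        completed.append(phase)
    return completed, None


records = [
    {"id": "M1", "department": "sales", "name": "Widget"},
    {"id": "M2", "department": "sales", "name": "Gadget"},
    {"id": "M3", "department": "finance", "name": "Ledger A"},
    {"id": "M4", "department": "procurement", "name": "Vendor X"},
]

# Rehearsal: uppercase names in a sandbox and check that the rule held.
failures = trial_migration(
    records,
    transform=lambda r: {**r, "name": r["name"].upper()},
    check=lambda r: r["name"].isupper(),
    sample_size=2,
)
print("sandbox failures:", failures)

# Production already holds M4, so the procurement phase is rejected.
production = {"M4": {"id": "M4", "department": "procurement", "name": "Existing"}}
completed, failure = phased_migration(records, production)
print(completed, failure)
```

The sales and finance phases commit, while the procurement phase is rejected without touching the existing M4 record. That is the early, contained failure a phased migration is meant to surface.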

5. Post-Migration Testing and Monitoring

Once the data has been successfully migrated, it’s critical to conduct thorough testing to verify that everything functions as anticipated. This stage entails checking the integrity and functionality of the migrated data and ensuring that the new ERP system runs smoothly.

- System Validation: Verify all aspects of the new ERP system that depend on the data that has been migrated, such as reporting, system integrations, and entry forms. This confirms that the system is doing what it’s supposed to, and that the data is properly reflected throughout the system.

- User Acceptance Testing (UAT): Involve end-users and stakeholders to verify the usability and accuracy of the data in the system. UAT confirms that the new system meets business needs and that users are comfortable handling the migrated data. Obtain sign-off from key users on the system's readiness before going live.

- Monitor for Problems: After the migration, keep monitoring the system for performance problems, data inconsistencies, or user complaints. Maintain a clear communication channel so end-users can report problems and have them corrected promptly. Keep an eye on system performance, integrations, and data flows to make sure that everything works as expected.
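One concrete form of post-migration validation is a reconciliation report that compares source and target record by record. The sketch below assumes both sides are available as lists of dictionaries with the same key and field names (i.e., the source has already been passed through the same mapping as the target); it hashes the selected fields of each record so that changed values show up as mismatches.

```python
import hashlib
import json


def fingerprint(record, fields):
    """Stable SHA-256 hash of the selected fields of one record."""
    payload = json.dumps({f: record.get(f) for f in fields}, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source, target, key, fields):
    """Compare source and target records by key after a migration."""
    src = {r[key]: fingerprint(r, fields) for r in source}
    tgt = {r[key]: fingerprint(r, fields) for r in target}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source = [
    {"id": "C001", "name": "Acme Corp", "city": "Berlin"},
    {"id": "C002", "name": "Globex", "city": "Paris"},
    {"id": "C003", "name": "Initech", "city": "Austin"},
]
target = [
    {"id": "C001", "name": "Acme Corp", "city": "Berlin"},
    {"id": "C002", "name": "Globex", "city": "Pariss"},
    {"id": "C004", "name": "Hooli", "city": "Palo Alto"},
]
result = reconcile(source, target, key="id", fields=("name", "city"))
print(result)
```

Matching record counts alone can hide problems: here both sides hold three records, yet one is missing, one is unexpected, and one has a changed value.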

6. Training and Support

Training and support are vital for familiarizing users with the new ERP system and resolving post-migration issues as early as possible.

- End-User Training: Run training sessions for all employees involved to familiarize them with the new ERP system and with handling the migrated data. Training should cover everything from basic data entry to advanced reporting functions. Hands-on sessions help users navigate the system efficiently and shorten the learning curve.

- Ongoing Support: Provide post-migration support to resolve issues that arise after the migration. This could involve establishing a help desk, offering FAQs, or providing troubleshooting material. Ongoing support allows unforeseen problems to be resolved quickly and keeps operations running smoothly.

- Documentation: Offer extensive documentation that explains new workflows, data access methods, and system features. Documentation will act as a guide for users and facilitate consistency in data handling within the new ERP system.

Best Practices for Successful ERP Master Data Migration

- Involve Key Stakeholders: Engage key business stakeholders in the migration process. Their feedback keeps the migration aligned with business needs, and their involvement reduces resistance to change.

- Use Automated Tools: Use data migration software and tools to automate and simplify the migration process. These tools can minimize errors, save time, and improve data consistency.

- Back Up Data: Always back up your data before starting the migration. This gives you a rollback strategy in case of failure.

- Establish a Governance Framework: Set well-defined roles and responsibilities for data management prior to, during, and after the migration.

- Prioritize Data Quality: Prioritize data quality from the start, since bad data can undermine the success of the ERP system. Clean, correct, and standardized data is essential for a smooth migration.
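The backup-and-rollback practice can be illustrated at small scale. This is a minimal sketch under loud assumptions: the target data is a JSON-serializable Python dictionary and the backup is a JSON file, whereas a real ERP migration would rely on database- or system-level backups. `backup`, `restore`, `migrate_with_rollback`, and `failing_step` are hypothetical helpers.

```python
import json
import tempfile
from pathlib import Path


def backup(records, path):
    """Write a JSON snapshot of the target data before the migration starts."""
    Path(path).write_text(json.dumps(records), encoding="utf-8")


def restore(path):
    """Read the snapshot back so a failed migration can be rolled back."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def migrate_with_rollback(target, migrate, path):
    """Back up 'target', run 'migrate' on it, and restore the snapshot on failure."""
    backup(target, path)
    try:
        migrate(target)
    except Exception:
        # Roll back in place so callers holding a reference see the restored data.
        target.clear()
        target.update(restore(path))
        raise
    return target


def failing_step(data):
    """Hypothetical migration step that modifies data and then fails."""
    data["C001"]["city"] = "Munich"
    raise RuntimeError("load failed halfway")


target = {"C001": {"name": "Acme Corp", "city": "Berlin"}}
with tempfile.TemporaryDirectory() as tmp:
    try:
        migrate_with_rollback(target, failing_step, Path(tmp) / "backup.json")
    except RuntimeError as exc:
        print("rolled back after:", exc)
print(target)
```

The failed step's partial change to C001 is discarded and the original values are restored, while the error is still re-raised so the failure is not hidden.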

Conclusion

Master data migration is an important activity when implementing or replacing an ERP system. By carefully planning, mapping, cleansing, and validating your data, you can avoid typical pitfalls and help ensure that your new ERP system runs smoothly and effectively. Data migration to a new ERP system calls for a systematic approach, the right tools, and cooperation from all stakeholders involved. By following the best practices in this guide, you can make your ERP master data migration smooth and position your organization for future success.
